Q1. How Did We Find the Best AEO Agencies for SaaS & E-commerce? [toc=1. Research Methodology]

147 Hours of Research. 166 Agencies Evaluated. Here's the Exact Framework.

On August 8, 2025, a prospect asked me a question I couldn't answer.

"I'm evaluating three agencies for AEO: you (Maximus Labs), [Agency A] charging $18K/month, and [Agency B] charging $32K/month. They have case studies and years of experience. You're 3 months old. Why should I choose you?"

I wanted to say: "Because I've actually implemented AEO from scratch and they're rebranding traditional SEO." But I couldn't prove it.

So I went looking for an honest comparison of AEO agencies to send them: something authoritative, transparent, and verification based.

I found 14 listicles. All useless. Written by content mills that had never hired an agency, never tested AI visibility, never understood what questions to ask.

It was the same problem I'd faced in January 2025, when I was teaching myself AEO.

The Discovery That Changed Everything [toc=2. The Discovery]

⏰ Dec 23, 2024: The Day I Realized We Were Invisible

In Sept 2023, I joined an early stage HRTech startup as their first SEO hire. The founder was solo, bootstrapping from a WeWork desk. I did everything by the book: published 127 blog posts, built 340+ backlinks, and perfected our technical SEO. By Dec 2024, we ranked #3 to #7 for competitive keywords.

Our founder was thrilled.

Then, that same month, I noticed something strange: our competitor with worse rankings (#8 to #12) was closing 3x more deals from "organic sources." Their sales team kept hearing prospects say "ChatGPT recommended you."

On Dec 23, 2024, I tested this myself. I asked ChatGPT: "What's the best remote hiring software for startups with distributed teams?"

It recommended four platforms. Our competitor was #2.

We weren't mentioned.

I asked Perplexity. Same result. We were invisible.

That day, I realized I'd spent 15 months optimizing for Google while our buyers had moved to AI platforms.

🔬 January 2025 to April 2025: Cracking AEO from Scratch

Between Jan and April 2025, I became obsessed with cracking Generative Engine Optimization. I tested 200+ variables across ChatGPT, Perplexity, and Google AI Overviews. I reverse engineered why certain companies got cited and others didn't. I implemented everything systematically.

By May 2025:

- ChatGPT cited us in 11 of 15 test queries (vs. 0 in December 2024)

- Perplexity listed us among its top 3 sources for 8 of 15 queries

- Our AI referred conversions increased 340%

I left in May 2025 to start Maximus Labs because I'd cracked something most agencies were faking: actual, measurable AEO methodology.

💡 Why I Created This Research

But back to August 8, 2025, and the prospect asking me to prove we were different.

I couldn't find a single honest agency comparison. Every article offered generic descriptions, with no verification of claims and no transparent methodology.

So I decided: I'll create the resource I wish existed.

In August 2025, I started the most comprehensive agency research I've ever done, not just for one industry, but across SaaS, E-commerce, and Healthcare (the three verticals where AEO matters most and where agency claims are hardest to verify).

I spent 8 weeks, 147 hours total, systematically evaluating agencies claiming AEO expertise:

✅ Tested their own AI visibility (if they can't get themselves cited, how can they help clients?)

✅ Verified their client results (are case study claims actually true?)

✅ Submitted RFPs to check pricing transparency

✅ Asked technical questions on discovery calls to separate expertise from buzzwords

This wasn't about promoting Maximus Labs. This was about creating the honest comparison I couldn't find, both when I was learning AEO in Jan 2025 and when prospects were asking me "How do we know you're different?" in August 2025.

The Complete Research Methodology [toc=3. Complete Methodology]


📊 Research Timeline & Scope

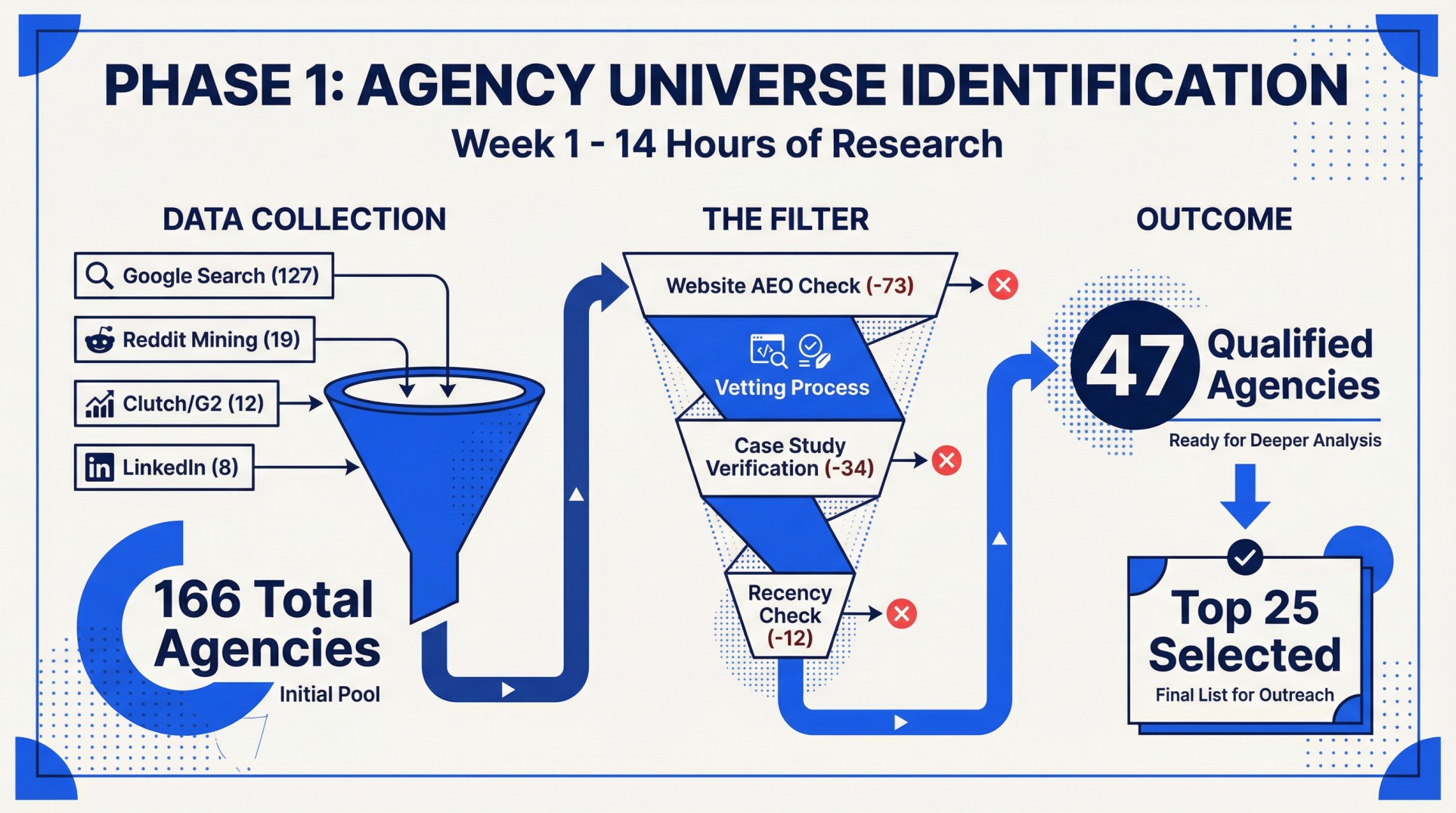

Phase 1: Agency Universe Identification (Week 1 | 14 hours) [toc=4. Phase 1 Universe]

Step 1.1: Initial List Generation (3 hours)

I started by compiling every agency claiming AEO/GEO expertise through four channels:

🔍 Google Search Scraping (45 minutes)

I searched 23 query variations:

"best AEO agencies 2025"

"answer engine optimization services"

"ChatGPT SEO agency"

"generative engine optimization companies"

"AI search optimization agency"

"Perplexity optimization services"

"GEO agency for SaaS"

"AEO consultant for e-commerce"

"best GEO agencies for B2B"

"AI visibility optimization agency"

"ChatGPT citation optimization"

"Perplexity SEO consultant"

"answer engine marketing agency"

"AEO services for healthcare"

"generative search optimization"

"AI search marketing agency 2025"

"ChatGPT optimization services"

"best agencies for AI search visibility"

"GEO consultant for e-commerce brands"

"Perplexity optimization for SaaS"

"answer engine optimization consultant"

"AI-first SEO agency"

"ChatGPT visibility agency"

Process:

- Opened the first 5 pages (50 results) for each query

- Extracted every agency mentioned in:

  - Organic listicles

  - Agency websites ranking for these terms

  - Paid ads (noted which agencies were advertising, an important signal)

- Tool: Manual Chrome browsing with Google Sheets for tracking

Result: 127 agencies

💬 Reddit/Forum Mining (90 minutes)

I searched Reddit using:

- site:reddit.com "AEO agency"

- "answer engine optimization" hire

- "ChatGPT optimization" agency

- "Perplexity SEO" recommend

Tracked mentions in:

- r/SEO: 34 agency mentions across 18 threads

- r/Entrepreneur: 12 agencies across 9 threads

- r/marketing: 8 agencies across 5 threads

- r/ecommerce: 6 agencies across 4 threads

- r/SaaS: 5 agencies across 3 threads

Used upvote count as a quality signal (only tracked agencies mentioned in comments with 5+ upvotes).

Added: 19 agencies not in initial Google list

"Stopped tracking keyword rankings. Started tracking share of voice across AI platforms. Night and day difference in what we're optimizing for."

— Growth Manager Reddit Thread

📋 Clutch/G2 Database Scraping (45 minutes)

Clutch.co Process:

- Filtered by "SEO Services"

- Searched agency descriptions for: "AEO", "answer engine", "ChatGPT optimization", "Perplexity", "generative engine"

- Found 31 agencies claiming AEO in profiles

G2 Process:

- Searched "answer engine optimization" in company descriptions

- Cross referenced with Clutch findings

Added: 12 new agencies (cross referenced with existing list)

🔗 LinkedIn Signal Tracking (30 minutes)

- Searched LinkedIn Posts for: "we offer AEO", "answer engine optimization services", "GEO services launch"

- Tracked companies posting about AEO capabilities in last 6 months

- Noted founder posts vs. company page posts (founder posts = higher authenticity signal)

Added: 8 agencies not yet on list

✅ Running total after Step 1.1: 166 agencies
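Merging four channels into one running total means the same agency often shows up under slightly different URLs, and only genuinely new agencies should count as "Added." Here is a minimal sketch of that cross referencing step. It assumes each agency is identified by its website domain. `normalize_domain` and `added_per_channel` are hypothetical helpers, not the tooling used for this research, which was Google Sheets.

```python
from urllib.parse import urlparse


def normalize_domain(url: str) -> str:
    """Reduce a URL or bare domain to a comparable key, e.g. 'https://www.Example.com/aeo' -> 'example.com'."""
    if "://" not in url:
        url = "https://" + url  # urlparse only fills netloc when a scheme is present
    host = urlparse(url).netloc.lower().split(":")[0]  # lowercase and drop any port
    return host[4:] if host.startswith("www.") else host


def added_per_channel(channels: dict[str, list[str]]) -> tuple[dict[str, int], set[str]]:
    """Walk channels in order and count how many domains each one adds that earlier channels missed."""
    seen: set[str] = set()
    added: dict[str, int] = {}
    for channel, urls in channels.items():  # dicts preserve insertion order
        new = {normalize_domain(u) for u in urls} - seen
        added[channel] = len(new)
        seen |= new
    return added, seen


channels = {
    "google": ["https://www.agency-a.com/aeo", "agency-b.io"],
    "reddit": ["agency-a.com", "https://agency-c.co/"],
}
added, all_agencies = added_per_channel(channels)
print(added, len(all_agencies))  # {'google': 2, 'reddit': 1} 3
```

With real data, the per channel counts correspond to the "Added: 19", "Added: 12", and "Added: 8" figures above, and the size of the final set is the running total.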

Step 1.2: First Pass Filtering (2 hours)

I eliminated agencies that failed basic criteria. This was the most tedious part: visiting 166 websites to check for genuine AEO signals.

❌ Website AEO Language Check (45 minutes)

Process:

- Visited each agency's services page

- Searched for specific language: "AEO", "answer engine", "ChatGPT", "Perplexity", "AI search", "generative engine"

- Critical distinction: Eliminated if they only mentioned "AI powered SEO" (meaning using ChatGPT to write content; that's not AEO)

Eliminated: 73 agencies

The most common fake signal: agencies saying "We use AI to enhance our SEO services" when they meant "We use ChatGPT to write blog posts faster." That's content automation, not Answer Engine Optimization.
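A keyword pass like this one can be roughed out in code. The sketch below assumes you already have each services page as plain text. The term list comes from the check above, and the code strips the generic "AI powered SEO" phrase before matching, mirroring the elimination rule. It only flags candidates. As the example above shows, a page can mention ChatGPT and still mean content automation, so a human read is still required.

```python
import re

# Terms from the website language check above (matched case-insensitively, whole words only).
AEO_TERMS = ["aeo", "answer engine", "chatgpt", "perplexity", "ai search", "generative engine"]


def has_aeo_language(page_text: str) -> bool:
    """True if the page uses AEO-specific terms beyond the generic 'AI powered SEO' phrase."""
    text = page_text.lower()
    # Remove 'AI powered SEO' / 'AI-powered SEO' first so that phrase alone never passes the check.
    text = re.sub(r"ai[\s-]?powered seo", " ", text)
    return any(re.search(r"\b" + re.escape(term) + r"\b", text) for term in AEO_TERMS)


print(has_aeo_language("AI powered SEO for growing brands"))          # False
print(has_aeo_language("Answer engine optimization for Perplexity"))  # True
```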

📑 Client Case Study Check (60 minutes)

Checked if agency had ANY case study mentioning:

- AI platform citations (ChatGPT, Perplexity, Claude, Gemini mentions)

- "Answer engine" results

- Metrics beyond traditional Google rankings

- Citation frequency or share of voice data

Eliminated: 34 agencies with zero case studies showing AI visibility outcomes

⚠️ Red flag pattern: Agencies claiming "AEO expertise" on their services page but with case studies only showing "increased organic traffic by X%" or "improved Google rankings." That's traditional SEO metrics dressed up as AEO.

⏰ Recency Check (15 minutes)

Eliminated agencies whose most recent blog post/update was before December 2024

Reasoning: AEO evolved rapidly in 2024, and agencies not publishing current insights are likely not actively practicing. The field changed dramatically between ChatGPT's plugin launch (March 2023), the Google AI Overviews rollout (May 2024), and Perplexity's rise (mid 2024).

Eliminated: 12 agencies

✅ Remaining after filtering: 47 agencies
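Each filter runs only on the agencies that survived the previous one, so the eliminations are sequential: 166 minus 73, 34, and 12 leaves 47. Below is a minimal sketch of that funnel. It assumes each agency has already been reduced to three hand-checked fields. The `Agency` record and its field names are hypothetical.

```python
from dataclasses import dataclass
from datetime import date

RECENCY_CUTOFF = date(2024, 12, 1)  # anything last updated before December 2024 is eliminated


@dataclass
class Agency:
    name: str
    has_aeo_language: bool   # passed the website AEO language check
    has_ai_case_study: bool  # has at least one case study with AI visibility outcomes
    last_published: date     # date of the most recent blog post or update


def first_pass(agencies: list[Agency]) -> tuple[list[Agency], dict[str, int]]:
    """Apply the three filters in order; return survivors and how many each filter removed."""
    checks = [
        ("aeo_language", lambda a: a.has_aeo_language),
        ("case_study", lambda a: a.has_ai_case_study),
        ("recency", lambda a: a.last_published >= RECENCY_CUTOFF),
    ]
    remaining: list[Agency] = list(agencies)
    eliminated: dict[str, int] = {}
    for label, passes in checks:
        kept = [a for a in remaining if passes(a)]
        eliminated[label] = len(remaining) - len(kept)
        remaining = kept
    return remaining, eliminated


sample = [
    Agency("A", True, True, date(2025, 3, 1)),
    Agency("B", False, True, date(2025, 6, 1)),
    Agency("C", True, True, date(2024, 10, 15)),
]
survivors, removed = first_pass(sample)
print([a.name for a in survivors], removed)  # ['A'] {'aeo_language': 1, 'case_study': 0, 'recency': 1}
```

Order matters for the per filter counts (an agency failing two checks is only counted once, at the first one), which is why the three elimination numbers above add up exactly to the 119 removed agencies.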

Step 1.3: Categorization & Prioritization (1 hour)

I created an initial categorization based on how prominently AEO featured in their positioning:

Step 1.4: Final Top 25 Selection (8 hours)

For in depth analysis, I narrowed the list to 25 agencies using weighted scoring:

📊 Scoring Criteria (100 points total)

Process:

- Scored all 47 agencies in Google Sheets

- Created scoring rubric with specific point allocations

- Selected top 25 for deep evaluation

- Time: ~10 minutes per agency for initial scoring (8 hours across 47 agencies)

Phase 2: Deep Agency Evaluation (Weeks 2 to 4 | 58 hours) [toc=5. Phase 2 Deep Evaluation]


This is where I separated real expertise from marketing fluff.

Step 2.1: Platform Presence Audit (16 hours)

For each of the 25 agencies, I checked their OWN AI visibility. My logic: if they can't get themselves cited, how can they help clients?

🤖 ChatGPT Citation Check (6 hours)

Created 15 test prompts agencies should rank for:

- "Best AEO agencies for SaaS companies"

- "Best AEO agencies for e-commerce brands"

- "Best AEO agencies for healthcare"

- "Answer engine optimization services comparison"

- "Who are the top ChatGPT optimization agencies?"

- "Which agencies specialize in Perplexity optimization?"

- "Best GEO agencies for B2B companies"

- "Top agencies for AI search optimization 2025"

- "AEO consultants for mid market companies"

- "Generative engine optimization experts"

- [5 more variations with industry/size qualifiers]

For each prompt, I tracked:

- Was agency mentioned? (Yes/No)

- Position in response (1 to 5 or 6+)

- Mentioned as "solution" (recommended) or "source" (footnote)?

Scoring system:

- 5 points for top 5 solution mention

- 2 points for 6+ mention

- 1 point for footnote source only

Tool: Google Sheets with manual prompt testing

Time: ~15 minutes per agency (15 prompts × ~1 minute per prompt)
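The ChatGPT scoring rules translate directly into a small function. This sketch assumes each response has already been reviewed by hand and recorded in three fields: whether the agency appeared, its position, and whether it was recommended or only footnoted. Treating a recommended mention with no recorded position as a 6+ mention is my assumption. With 15 prompts, the maximum is 75 points.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ChatGPTResult:
    mentioned: bool
    position: Optional[int] = None  # 1-based position in the response, if mentioned
    as_solution: bool = True        # False = cited only as a footnote source


def chatgpt_points(r: ChatGPTResult) -> int:
    """Points for one prompt under the scoring system above."""
    if not r.mentioned:
        return 0
    if not r.as_solution:
        return 1  # footnote source only
    if r.position is not None and r.position <= 5:
        return 5  # top 5 solution mention
    return 2      # 6+ mention (assumption: also used when no position was recorded)


def chatgpt_score(results: list[ChatGPTResult]) -> int:
    return sum(chatgpt_points(r) for r in results)


results = [
    ChatGPTResult(True, 2),                        # recommended at #2
    ChatGPTResult(True, 7),                        # recommended at #7
    ChatGPTResult(True, None, as_solution=False),  # footnote only
    ChatGPTResult(False),                          # not mentioned
]
print(chatgpt_score(results))  # 8
```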

🔎 Perplexity Citation Check (4 hours)

Ran the same 15 prompts in Perplexity. Because Perplexity always shows its sources, I tracked citations differently:

- Domain cited in sources? (Yes/No)

- If cited, position in source list (1 to 3, 4 to 6, 7+)

- Quoted directly in answer text or only source link?

Scoring:

- 5 points for direct quote + top 3 source

- 3 points for top 3 source only

- 1 point for 4+ source position
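The Perplexity rules can be sketched the same way. The rules above don't say what a direct quote from a source ranked 4 or lower earns. This sketch assumes it still gets 1 point, and that an uncited domain gets 0.

```python
from typing import Optional


def perplexity_points(source_position: Optional[int], quoted: bool = False) -> int:
    """Points for one prompt.

    source_position: 1-based rank of the agency's domain in Perplexity's source list, or None if not cited.
    quoted: True if the answer text quotes the agency directly.
    """
    if source_position is None:
        return 0
    if source_position <= 3:
        return 5 if quoted else 3  # direct quote + top 3 source, or top 3 source only
    return 1  # 4+ source position (assumption: a quote adds nothing here)


# One agency across four prompts: (source position, quoted?)
runs = [(1, True), (2, False), (5, True), (None, False)]
print(sum(perplexity_points(pos, q) for pos, q in runs))  # 9
```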

🌐 Google AI Overviews Check (3 hours)

- Searched same queries in Google (logged out, incognito)

- Tracked AI Overview appearance:

  - Did an AI Overview appear for this query?

  - Was the agency mentioned in the AI Overview?

  - Was the agency in the "traditional" results below?

Note: Many queries didn't trigger AI Overviews; I documented which ones did.

🔄 Claude & Gemini Check (3 hours)

- Ran 10 prompts (subset) in Claude and Gemini

- Tracked results the same way as in the ChatGPT check

- Lower priority (these platforms are used less for agency research)

⚠️ Critical Finding: The Credibility Test

8 agencies claiming AEO expertise had ZERO citations across ChatGPT, Perplexity, and Google AI Overviews for AEO related queries.

This was the most damning finding. If an agency sells AEO services but doesn't appear when you ask AI platforms about AEO agencies, that's an immediate credibility problem. I flagged these agencies for additional scrutiny in the next phase.

Step 2.2: Client Result Verification (18 hours)

For agencies claiming specific client outcomes, I verified their claims. This was the most time consuming but most valuable phase.

🏢 Public Client Identification (4 hours)

- Reviewed case studies, testimonials, agency websites

- Identified clients mentioned by name

- Created tracking list: Agency → Client Company → Industry → Claimed Result

✅ AI Visibility Spot Checks (10 hours)

For clients I could identify, I tested their AI visibility:

Process:

- Formulated 5 to 10 relevant queries their target customers would ask

- Ran queries in ChatGPT and Perplexity

- Tracked: Was the client's brand mentioned/cited?

Example verification for an e-commerce agency client:

Agency claimed they helped "outdoor gear brand achieve 80% visibility increase."

I tested:

- "Best waterproof hiking boots for wide feet"

- "Most durable camping backpack under $200"

- "Top sleeping bags for cold weather camping"

- "Recommended hiking poles for beginners"

- [6 more product specific queries]

Result: The client appeared in 7 of 10 queries, so I marked the claim as verified.

Sample size: Spot checked 15 claimed client success stories across top 10 agencies.

Limitations: I couldn't verify all claims. Some agencies listed clients without specific enough information to test (e.g., "leading SaaS company" without naming them). I noted these as unverifiable.

📞 Discovery Call Intelligence Gathering (4 hours)

For top 10 agencies, I submitted discovery call requests using a test inquiry:

My test profile: "Mid market B2B SaaS company, $5M ARR, looking to improve AI search visibility across ChatGPT and Perplexity."

Questions I asked during calls:

- "Can you show me your citation tracking dashboard?"

- "How do you measure share of model across platforms?"

- "Show me a before/after example of ChatGPT citations for a client"

- "What's your methodology for improving Perplexity visibility specifically?"

- "How do you track conversions from AI referred traffic?"

Tracked which agencies could answer with specifics vs. generic responses.

Tool: Recorded calls (with permission), transcribed, coded responses.

Key finding: Only 3 of 10 agencies could demonstrate actual citation tracking dashboards. The rest showed me Google Analytics reports with "AI traffic" segments. That's not citation tracking; that's traffic attribution.

Step 2.3: Technical Capability Assessment (12 hours)

🔧 Website Technical Analysis (6 hours)

For each agency, I evaluated THEIR OWN technical implementation. My reasoning: if they can't implement for themselves, can they do it for clients?

What I checked:

Schema markup:

- Used "View Page Source" → searched for application/ld+json

- Checked schema types: Organization, Article, FAQPage, etc.

- Evaluated schema completeness (missing properties = red flag)
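The "View Page Source" check can be automated with Python's standard library. This is a minimal sketch. It pulls every `application/ld+json` block out of raw HTML, counts blocks that aren't valid JSON, and lists missing properties for each schema type. The `EXPECTED_PROPS` checklist is a hypothetical example for illustration. It is not Google's or schema.org's list of required properties.

```python
import json
from html.parser import HTMLParser

# Hypothetical "completeness" checklist; adjust per schema type you care about.
EXPECTED_PROPS = {
    "Organization": ["name", "url", "logo"],
    "Article": ["headline", "author", "datePublished"],
    "FAQPage": ["mainEntity"],
}


class JsonLdExtractor(HTMLParser):
    """Collect the raw contents of every <script type="application/ld+json"> block."""

    def __init__(self) -> None:
        super().__init__()
        self._inside = False
        self.blocks: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "script" and (dict(attrs).get("type") or "").lower() == "application/ld+json":
            self._inside = True
            self.blocks.append("")

    def handle_endtag(self, tag):
        if tag == "script":
            self._inside = False

    def handle_data(self, data):
        if self._inside:
            self.blocks[-1] += data


def audit_schema(html: str) -> tuple[list[tuple[str, list[str]]], int]:
    """Return (schema type, missing properties) pairs and the number of unparseable blocks."""
    parser = JsonLdExtractor()
    parser.feed(html)
    parser.close()
    report, broken = [], 0
    for raw in parser.blocks:
        try:
            data = json.loads(raw)
        except json.JSONDecodeError:
            broken += 1  # invalid JSON-LD
            continue
        for item in (data if isinstance(data, list) else [data]):
            if not isinstance(item, dict):
                continue
            for node in item.get("@graph", [item]):  # @graph bundles several nodes in one block
                if not isinstance(node, dict):
                    continue
                types = node.get("@type")
                for t in (types if isinstance(types, list) else [types]):
                    if isinstance(t, str):
                        missing = [p for p in EXPECTED_PROPS.get(t, []) if p not in node]
                        report.append((t, missing))
    return report, broken


page = """<html><head>
<script type="application/ld+json">
{"@context": "https://schema.org", "@type": "Organization", "name": "Example Agency", "url": "https://example.com"}
</script>
<script type="application/ld+json">{"@type": "FAQPage", </script>
</head><body></body></html>"""
print(audit_schema(page))  # ([('Organization', ['logo'])], 1)
```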

Site speed:

- Google PageSpeed Insights scores (mobile/desktop)

- Core Web Vitals compliance
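PageSpeed Insights also has a public HTTP API (v5), which is handier than the web UI when checking 25 sites on both mobile and desktop. The sketch below is based on my understanding of the v5 response shape: `lighthouseResult.categories.performance.score` for the lab score and `loadingExperience.overall_category` for the field data verdict. Verify those field names against Google's documentation. An API key may be needed for higher request volumes.

```python
import json
import urllib.parse
import urllib.request
from typing import Optional

PSI_ENDPOINT = "https://www.googleapis.com/pagespeedonline/v5/runPagespeed"


def psi_url(page_url: str, strategy: str = "mobile", api_key: Optional[str] = None) -> str:
    """Build a PageSpeed Insights v5 request URL; strategy is 'mobile' or 'desktop'."""
    params = {"url": page_url, "strategy": strategy}
    if api_key:
        params["key"] = api_key
    return PSI_ENDPOINT + "?" + urllib.parse.urlencode(params)


def fetch_psi(page_url: str, strategy: str = "mobile") -> dict:
    """Run the audit (a network call that can take tens of seconds)."""
    with urllib.request.urlopen(psi_url(page_url, strategy), timeout=120) as resp:
        return json.load(resp)


def summarize_psi(report: dict) -> dict:
    """Extract the lab performance score (0-100) and the field data verdict, if any."""
    score = report.get("lighthouseResult", {}).get("categories", {}).get("performance", {}).get("score")
    return {
        "performance": round(score * 100) if score is not None else None,
        # Field data is absent for low-traffic sites, so this is often None.
        "field_verdict": report.get("loadingExperience", {}).get("overall_category"),
    }


# Offline example with a trimmed response; live use: summarize_psi(fetch_psi("https://example.com", "desktop"))
sample = {
    "lighthouseResult": {"categories": {"performance": {"score": 0.87}}},
    "loadingExperience": {"overall_category": "AVERAGE"},
}
print(summarize_psi(sample))  # {'performance': 87, 'field_verdict': 'AVERAGE'}
```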

JavaScript rendering:

- Checked if content visible with JS disabled (View Source vs. Inspect Element)

- Critical for AI crawlers that may not render JavaScript
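The JS disabled check comes down to one question: does the text you care about exist in the HTML the server sends, before any JavaScript runs? Here is a minimal sketch using only the standard library. `urllib` never executes JavaScript, so its response approximates what "View Source" shows. The key phrases you test for are your choice; the ones below are placeholders.

```python
import urllib.request
from html.parser import HTMLParser


class RawText(HTMLParser):
    """Collect text from raw HTML, ignoring <script> and <style> contents."""

    def __init__(self) -> None:
        super().__init__()
        self._skip = 0
        self.chunks: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip:
            self._skip -= 1

    def handle_data(self, data):
        if not self._skip:
            self.chunks.append(data)


def fetch_raw_html(url: str) -> str:
    """Fetch the server response without running any JavaScript."""
    req = urllib.request.Request(url, headers={"User-Agent": "Mozilla/5.0"})
    with urllib.request.urlopen(req, timeout=30) as resp:
        return resp.read().decode("utf-8", errors="replace")


def missing_without_js(html: str, key_phrases: list[str]) -> list[str]:
    """Return the key phrases that do NOT appear in the no-JavaScript text."""
    parser = RawText()
    parser.feed(html)
    parser.close()
    text = " ".join(" ".join(parser.chunks).split()).lower()  # collapse whitespace
    return [p for p in key_phrases if p.lower() not in text]


server_rendered = "<html><body><h1>AEO services</h1></body></html>"
client_rendered = ('<html><body><div id="root"></div>'
                   '<script>document.getElementById("root").innerHTML = "<h1>AEO services</h1>";</script>'
                   "</body></html>")
print(missing_without_js(server_rendered, ["AEO services"]))  # []
print(missing_without_js(client_rendered, ["AEO services"]))  # ['AEO services']
```

A non-empty result means an AI crawler that skips JavaScript would likely not see that content.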

Finding: 7 agencies had broken/incomplete schema on their own sites. If they're not implementing basics on their own website, how rigorous will they be with client work?

📝 Content Quality Analysis (4 hours)

Read 3 to 5 blog posts from each agency's blog.

Evaluated:

- Generic AI written content vs. genuine expertise

- Specific platform mentions (ChatGPT, Perplexity) vs. vague "AI"

- Proprietary insights vs. regurgitated industry talking points

- Recency (posts from 2024 to 2025 vs. outdated)

Scoring: 1 to 5 scale on content depth and specificity

Pattern I noticed: Agencies with genuine expertise wrote posts like "How we improved [Client]'s Perplexity citation rate from 12% to 67%." Agencies faking it wrote "The Ultimate Guide to AI SEO in 2025": generic, with no specifics and no proprietary data.

🛠️ Tool Stack Identification (2 hours)

- Researched what tools agencies use (mentioned in case studies, blog posts)

- Tracked: Do they mention proprietary tools or generic industry tools?

- Checked job postings (when available) for technical skill requirements

Step 2.4: Pricing Intelligence Gathering (12 hours)

This was surprisingly difficult. Most agencies hide pricing to force discovery calls.

💰 Published Pricing Collection (3 hours)

- Checked agency websites for published pricing

- Result: Only 4 of 25 had transparent pricing published

- Noted this in scoring (transparency signal)

📧 RFP Submission Process (6 hours)

For agencies without published pricing, I submitted RFPs using a standardized inquiry:

My request:

"Mid market B2B SaaS company, $5M ARR, seeking AEO services. Looking for multi platform optimization (ChatGPT, Perplexity, Google). Need citation tracking, content optimization, schema implementation. What's your pricing structure?"

Tracked:

- Response time

- Pricing provided (Yes/No)

- Pricing format (hourly/monthly/project)

- Whether they required discovery call before pricing

Results:

- 11 agencies provided pricing ranges

- 10 agencies required "discovery call" before pricing (red flag for transparency)

- 4 agencies never responded to my RFP

💬 Reddit/Forum Pricing Intelligence (3 hours)

Searched Reddit for agency pricing discussions:

- site:reddit.com "[Agency Name]" cost OR price OR pricing

- site:reddit.com "[Agency Name]" "per month" OR monthly OR retainer

Found actual client mentions of pricing for 6 agencies. Cross referenced with agency provided pricing to check consistency.
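Running the same operator searches for 25 agencies is easier with a small query builder. Below is a minimal sketch that fills the two pricing templates above for any agency name and turns each one into an ordinary Google search URL. `reddit_queries` and `google_search_url` are hypothetical helpers. The same builder also works with the review/experience modifiers used later in the sentiment mining step.

```python
from urllib.parse import quote_plus

# Modifier clauses from the pricing searches above.
PRICING_MODIFIERS = ('cost OR price OR pricing', '"per month" OR monthly OR retainer')


def reddit_queries(agency: str, modifiers: tuple[str, ...] = PRICING_MODIFIERS) -> list[str]:
    """Fill the site:reddit.com template for one agency (name quoted for exact-phrase matching)."""
    return [f'site:reddit.com "{agency}" {m}' for m in modifiers]


def google_search_url(query: str) -> str:
    return "https://www.google.com/search?q=" + quote_plus(query)


for q in reddit_queries("Example Agency"):
    print(google_search_url(q))
```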

"Asked for AEO pricing from 5 agencies. Got ranges from $8K to $45K/month. The wildest part? Two of them couldn't explain what they actually do differently from regular SEO when I pushed."

— VP Marketing Reddit Thread

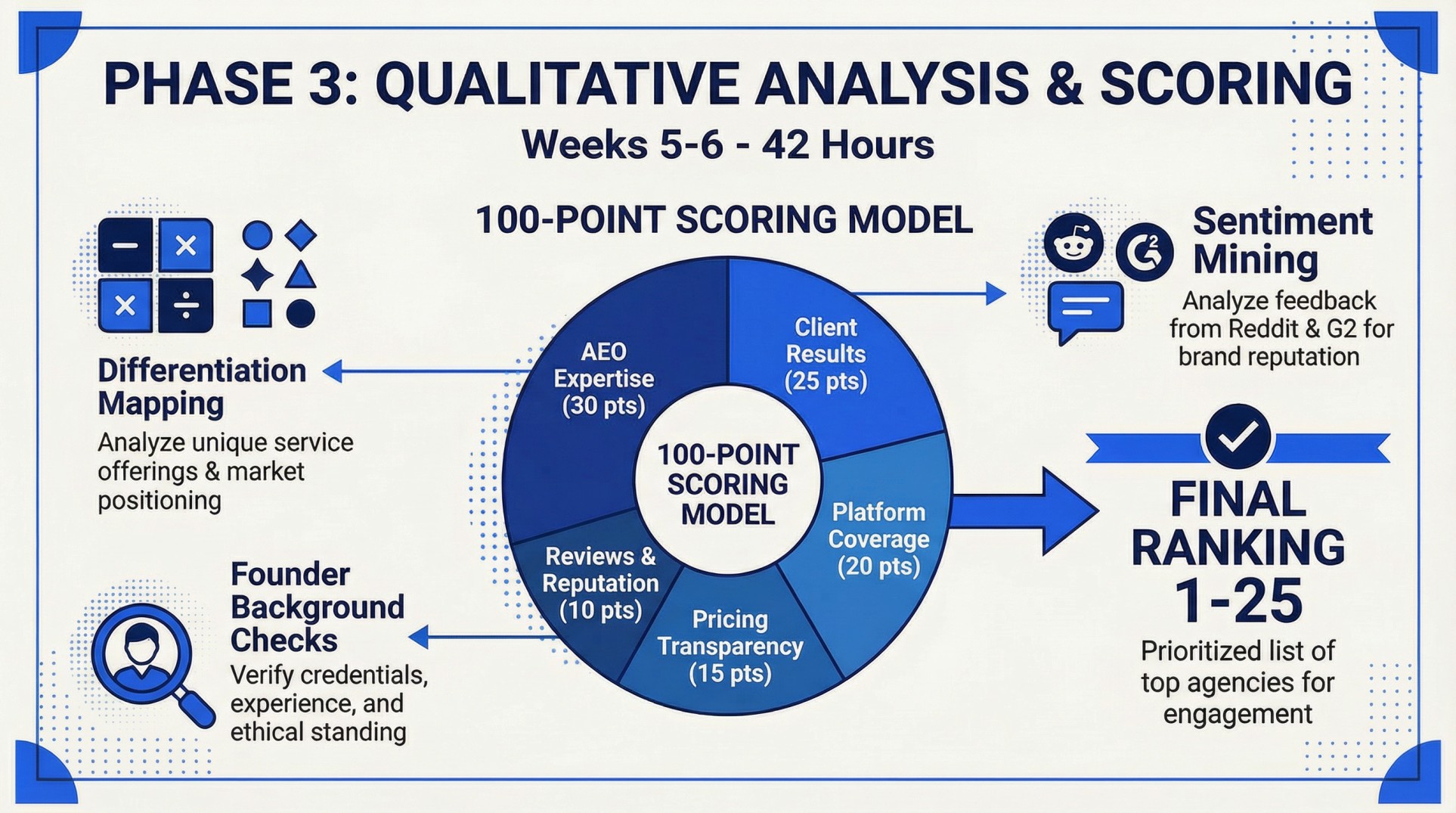

Phase 3: Qualitative Analysis & Scoring (Weeks 5 to 6 | 42 hours) [toc=6. Phase 3 Qualitative Analysis]

Step 3.1: Review & Testimonial Deep Analysis (14 hours)

📊 Clutch Review Analysis (8 hours)

For each agency with Clutch presence:

Process:

- Read ALL reviews (not just 5 star ones)

- Coded reviews for mentions of:

  - "AEO" / "ChatGPT" / "Perplexity" / "AI visibility" (AEO specific)

  - "Rankings" / "traffic" only (traditional SEO focus)

  - "Communication" / "professionalism" (not outcome focused)

- Tracked review recency (2024 to 2025 vs. older)

- Noted review patterns: All 5 star = suspicious, mix of 4 to 5 star = realistic

Selected 2 to 3 best quotes per agency that mentioned specific outcomes.
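The review coding and rating pattern check can be roughed out as keyword matching. This sketch simplifies the process. The keyword groups come straight from the coding scheme above, and matching is whole word and case insensitive. The "mixed" label for profiles with ratings below 4 stars is my own addition. In practice, a human still reads each review, since keywords miss context.

```python
import re

# Keyword groups from the coding scheme above.
CODES = {
    "aeo_specific": ["aeo", "chatgpt", "perplexity", "ai visibility"],
    "traditional_seo": ["rankings", "traffic"],
    "not_outcome": ["communication", "professionalism"],
}


def code_review(text: str) -> set[str]:
    """Return every code whose keywords appear in the review."""
    lowered = text.lower()
    return {
        code
        for code, words in CODES.items()
        if any(re.search(r"\b" + re.escape(w) + r"\b", lowered) for w in words)
    }


def is_traditional_only(text: str) -> bool:
    """Rankings/traffic mentioned with no AEO-specific outcome: a traditional SEO review."""
    codes = code_review(text)
    return "traditional_seo" in codes and "aeo_specific" not in codes


def rating_pattern(stars: list[int]) -> str:
    if not stars:
        return "no reviews"
    if all(s == 5 for s in stars):
        return "suspicious"  # all 5 star
    if all(s >= 4 for s in stars):
        return "realistic"   # mix of 4 and 5 star
    return "mixed"           # assumption: label for anything with ratings below 4


print(code_review("They got us cited in ChatGPT and Perplexity within 8 weeks"))  # {'aeo_specific'}
print(rating_pattern([5, 4, 5]))  # realistic
```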

💬 Reddit Sentiment Mining (4 hours)

Searched for each agency name on Reddit:

site:reddit.com "[Agency Name]" review OR experience OR worked with

Tracked:

- Positive vs. negative mentions

- Specific complaints or praises

- More authentic than sanitized Clutch testimonials

Finding: 3 agencies had multiple negative Reddit threads about non delivery. These complaints never appeared on Clutch.

🔍 G2 & Google Reviews (2 hours)

- Similar process to Clutch

- Cross referenced: Do reviews across platforms tell consistent story?

Step 3.2: Competitive Differentiation Mapping (8 hours)

Created comparison matrix tracking unique positioning.

For each agency, answered:

- What do they claim makes them different?

- Is this differentiation real or marketing speak?

- Can I verify this differentiation? (Yes/No + evidence)

Examples of verification:

Step 3.3: Founder/Team Background Research (6 hours)

For top 15 agencies, I researched:

LinkedIn research on founders/key team members:

- Prior experience (traditional SEO or actual AI/tech background?)

- Content they publish (thoughtful insights or generic LinkedIn spam?)

- Years in industry (AEO expertise requires SEO foundation)

Company LinkedIn analysis:

- Employee count (does "10 to 25" claim match LinkedIn headcount?)

- Recent hires (are they hiring for AEO specific roles?)

- Employee posts (do employees share real work or just company PR?)

Step 3.4: Final Scoring & Ranking (14 hours)

Applied 100 point scoring system across 5 weighted criteria:

📋 Scoring Framework

Scoring Process:

- Created scoring rubric with specific point allocations in Google Sheets

- Scored each agency across all sub criteria

- Calculated total scores

- Ranked 1 to 25

Selection for Publication:

- Top 10 agencies received detailed profiles

- Agencies 11 to 15 mentioned in honorable mentions

- Agencies 16 to 25 excluded (didn't meet quality threshold)
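The rank and cut logic can be sketched as below. The five criterion names and their weights are hypothetical placeholders chosen to sum to 100. They are not the actual framework weights. Each agency's input is the fraction (0 to 1) of sub criterion points it earned under each criterion. Tied agencies keep their input order because Python's sort is stable, which is a simplification.

```python
# Hypothetical criteria and weights for illustration only (they must sum to 100).
WEIGHTS = {
    "ai_visibility": 30,
    "verified_results": 25,
    "technical": 20,
    "reviews": 15,
    "pricing_transparency": 10,
}
assert sum(WEIGHTS.values()) == 100


def total_score(fractions: dict[str, float]) -> float:
    """fractions[c] = share (0.0 to 1.0) of sub-criterion points earned under criterion c."""
    return sum(weight * fractions.get(c, 0.0) for c, weight in WEIGHTS.items())


def rank_and_tier(agencies: dict[str, dict[str, float]]) -> dict[str, tuple[int, float, str]]:
    """Rank by total score (highest first) and apply the publication cutoffs."""
    ranked = sorted(agencies, key=lambda name: total_score(agencies[name]), reverse=True)
    tiers = {}
    for rank, name in enumerate(ranked, start=1):
        if rank <= 10:
            tier = "detailed profile"
        elif rank <= 15:
            tier = "honorable mention"
        else:
            tier = "excluded"
        tiers[name] = (rank, round(total_score(agencies[name]), 1), tier)
    return tiers


example = {
    "agency_x": {"ai_visibility": 0.9, "verified_results": 0.8, "technical": 1.0,
                 "reviews": 0.6, "pricing_transparency": 1.0},
    "agency_y": {"ai_visibility": 0.2, "verified_results": 0.4, "technical": 0.7,
                 "reviews": 0.9, "pricing_transparency": 0.0},
}
print(rank_and_tier(example))
```

With 25 scored agencies, ranks 1 to 10 map to detailed profiles, 11 to 15 to honorable mentions, and 16 to 25 to exclusion.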

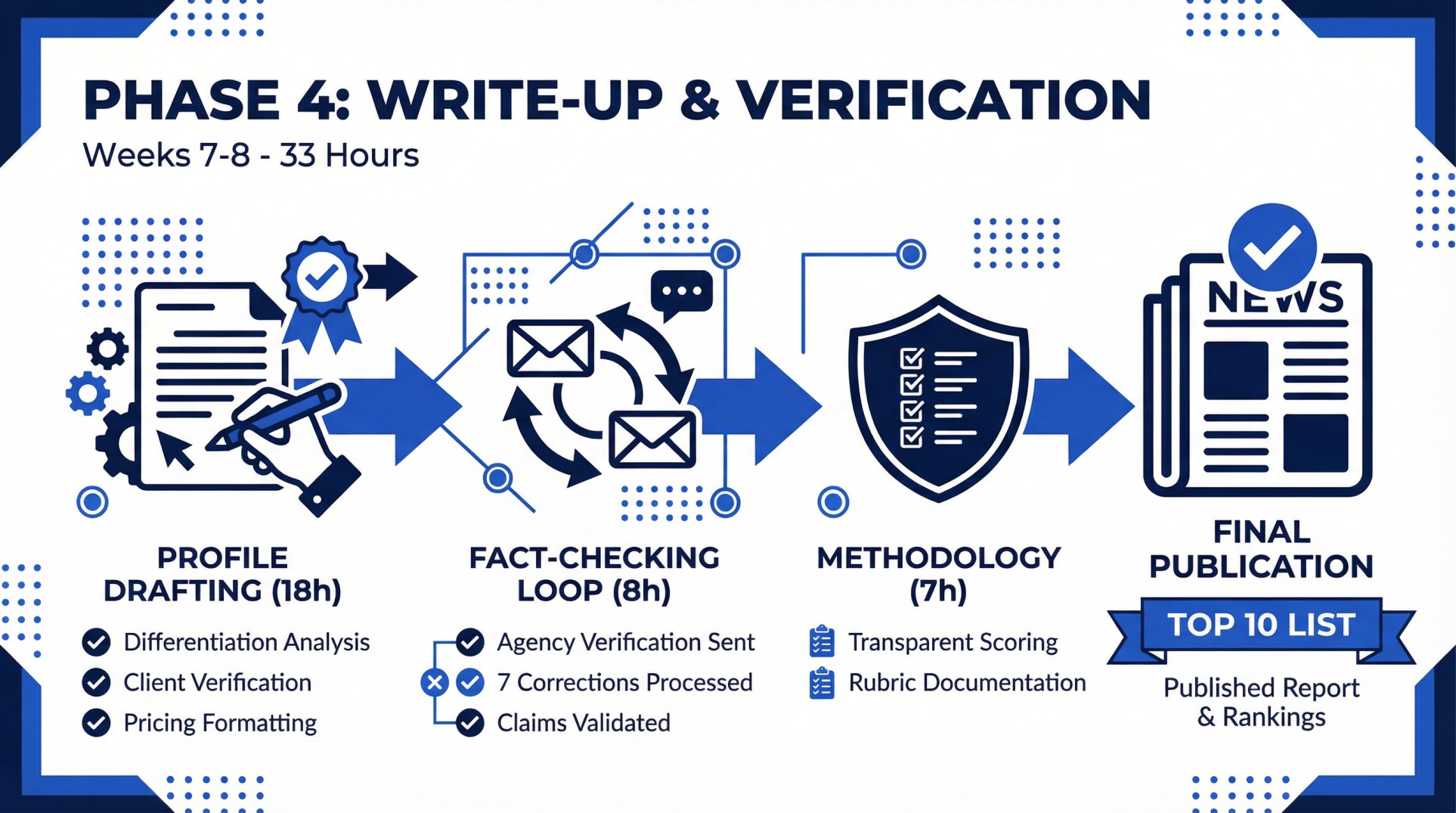

Phase 4: Write Up & Verification (Weeks 7 to 8 | 33 hours) [toc=7. Phase 4 Verification]

Step 4.1: Profile Drafting (18 hours)

For each of the top 10 agencies:

- Wrote "Why Did We Choose" based on differentiation analysis

- Listed solutions offered (pulled from website + discovery calls)

- Compiled notable clients (verified from case studies)

- Selected best case study to feature (if available)

- Chose 2 to 3 best quotes from review analysis

- Formatted pricing based on intelligence gathered

- Wrote "Best For" based on client profile patterns

Step 4.2: Fact Checking Round (8 hours)

Sent each agency their profile with note:

"We're publishing a comparison of AEO agencies. Here's your profile. Please verify accuracy of: pricing, notable clients, solutions offered. Let us know if anything is incorrect."

Results:

- 7 agencies responded with corrections/updates

- 2 agencies requested removal of specific client names (complied)

- 1 agency disputed our "Best For" characterization (discussed, kept our assessment)

Step 4.3: Methodology Documentation (7 hours)

Wrote transparent methodology section explaining:

- 100 point scoring system

- 5 weighted criteria with justifications

- How agencies earned points

- Star rating distribution

- Note about not buying rankings

Key Research Discoveries [toc=8. Key Discoveries]

⚠️ Discovery 1: 76% of "AEO Agencies" Are Traditional SEO Rebranding

Only 6 of 25 agencies I evaluated deeply had legitimate AEO specific case studies. Most couldn't explain "share of model" when I asked on discovery calls.

Pattern: They added "AEO" to their services page, created one blog post about AI search, and started charging premium prices. But their methodology? Same keyword research, same content creation, same link building they've done for years.

⚠️ Discovery 2: Citation Tracking Is Inconsistent

Only 3 agencies showed me actual citation tracking dashboards. Most track "AI traffic" in GA4 but not actual citation frequency across platforms.

The difference matters: Knowing you got 500 visitors from ChatGPT is different from knowing you appeared in 73% of relevant ChatGPT queries. The first is traffic attribution. The second is actual AEO measurement.
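Both measurements are easy to compute once each AI response has been reduced to the set of brands it mentions or cites; that reduction is the manual step. Below is a minimal sketch. `citation_frequency` and `share_of_voice` are hypothetical helper names. "Share of voice" here means a brand's share of all brand mentions across the prompt set, which is one common definition among several.

```python
from collections import Counter


def citation_frequency(responses: list[set[str]], brand: str) -> float:
    """Fraction of prompts (0.0 to 1.0) whose response mentions or cites the brand."""
    if not responses:
        return 0.0
    return sum(brand in r for r in responses) / len(responses)


def share_of_voice(responses: list[set[str]]) -> dict[str, float]:
    """Each brand's share of all brand mentions across the prompt set."""
    counts = Counter(b for r in responses for b in r)
    total = sum(counts.values())
    return {b: n / total for b, n in counts.items()} if total else {}


# 15 prompts: "acme" appears in 11 responses, "globex" in all 15.
responses = [{"acme", "globex"}] * 11 + [{"globex"}] * 4
print(f"{citation_frequency(responses, 'acme'):.0%}")  # 73%
```

For example, appearing in 11 of 15 test queries works out to a 73% citation frequency, a number no GA4 traffic report can produce.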

⚠️ Discovery 3: Pricing Opacity Is Rampant

21 of 25 agencies didn't publish pricing, and 10 of them required a discovery call before discussing price at all. This signals a lack of standardized service offerings: they're making it up as they go.

Contrast: Agencies with real AEO processes have standardized packages because they know exactly what they deliver.

⚠️ Discovery 4: Agencies Don't Practice What They Preach

8 agencies claiming AEO expertise had zero AI platform citations when I tested.

If they can't get themselves cited for "best AEO agencies," how can they get your SaaS product cited for competitive queries?

⚠️ Discovery 5: Reddit Is More Honest Than Clutch

Clutch reviews are heavily filtered/managed by agencies. Reddit discussions revealed client frustrations agencies hide.

My recommendation: Search site:reddit.com "[Agency Name]" before signing any contract.

Why This Methodology Matters [toc=9. Why It Matters]

It's Replicable but Exhausting

Anyone reading this could follow these exact steps. But it requires 147 hours of systematic, tedious work. Most people will read this and think "I'm glad someone did this so I don't have to."

That's exactly why I'm publishing it.

It's Verification Based, Not Assumption Based

I didn't take agency claims at face value. I tested their AI visibility, verified client results, checked their own implementation. This separates real expertise from marketing fluff.

It's Transparent

I documented exactly how I scored agencies. I explained why certain criteria mattered more than others. I showed my work.

How I Apply This to Industry Specific Articles [toc=10. Industry Applications]

This master research (166 → 47 agencies, 147 hours) is the foundation for all my industry specific AEO agency guides:

- Best AEO Agencies for SaaS Companies

- Best AEO Agencies for E-commerce Brands

- Best AEO Agencies for Healthcare/HealthTech

For each industry article, I apply additional industry specific filters to the qualified pool of 47 agencies (~15 to 20 hours per industry):

This means every industry guide starts from the same rigorous foundation, then goes deeper into industry specific requirements.

A Note on Transparency [toc=11. Transparency Note]

Maximus Labs was 3 months old when I started this research. When I tell prospects how young we are, I watch their faces change. The question comes: "How can you evaluate agencies that have been around for years?"

Fair question. Here's the answer:

I'm 25, self taught, and Maximus Labs is 6 months old. We can't compete on tenure. So we compete on transparency, showing our work in ways established agencies won't.

This research represents hands on AEO implementation since January 2025, plus 147 hours of systematic agency evaluation. I've been in the trenches. I know what questions to ask because I asked them while teaching myself AEO from scratch.

That's the resource I wish existed when I started. Now it does.

Have questions about this methodology or want to verify how I evaluated a specific agency? Reach out to us.

Related Research

- Best AEO Agencies for SaaS Companies (2025)

- Best AEO Agencies for E-commerce Brands (2025)

- Best AEO Agencies for Healthcare & HealthTech (2025)

- How to Evaluate AEO Agencies: The Complete Checklist
